We often hear our customers say that their legacy mainframe applications are being superseded because they cannot evolve quickly enough to support the changing needs of the business. More than anything, this appears to be the key force behind the dramatic upswing in companies' interest in moving their applications onto other platforms, either by rewriting existing applications or, as in the case of our customers, by re-platforming them.

Behind the headline agility issues of mainframe application modernisation, there is important detail that needs to be discussed. Why is it so difficult to modernise mainframe applications? It is surely not the programming language. COBOL and other traditional languages are just that: programming languages. Any self-respecting programmer is used to working with multiple languages. COBOL may not be "cool", but neither are many of the other scripts, languages, or configuration syntaxes programmers deal with day in, day out.

We have spoken to several groups (customers, outsourcers and systems integrators) to get to the heart of the legacy application modernisation challenge. The same four issues come up again and again:

1. Development Tooling – Low Productivity on Mainframes

The traditional environment for a programmer working with COBOL or PL/1 on the mainframe has few of the productivity features today's programmers expect: smart cross-references, on-the-fly syntax checking, autocomplete for variables, and so on. Some vendors have tried, via proprietary tools, to bring the experience closer to that of a modern Java programmer, but there is no escaping the fact that a physical mainframe, with all its quirks, sits in the background. The blame for this productivity gap tends to stick, unfairly, to the dominant mainframe programming languages such as COBOL and PL/1. As a result, language choice becomes conflated with developer tooling in decisions about making mainframe development more agile.

2. Open-Source Availability – Limited Access to Innovation

Finding solutions to business requirements among the many popular open-source projects (more than 100 million repositories exist on GitHub) is one of the fundamental reasons why development processes in general have become more agile.

Clearly, the fewer lines of code that must be written to support a requirement, the faster that requirement will be satisfied. Yet very few open-source projects have been implemented and tested on legacy mainframes. Perhaps even more troubling is the difficulty of integrating a Linux-oriented open-source project with legacy applications in a natural and cost-effective way.

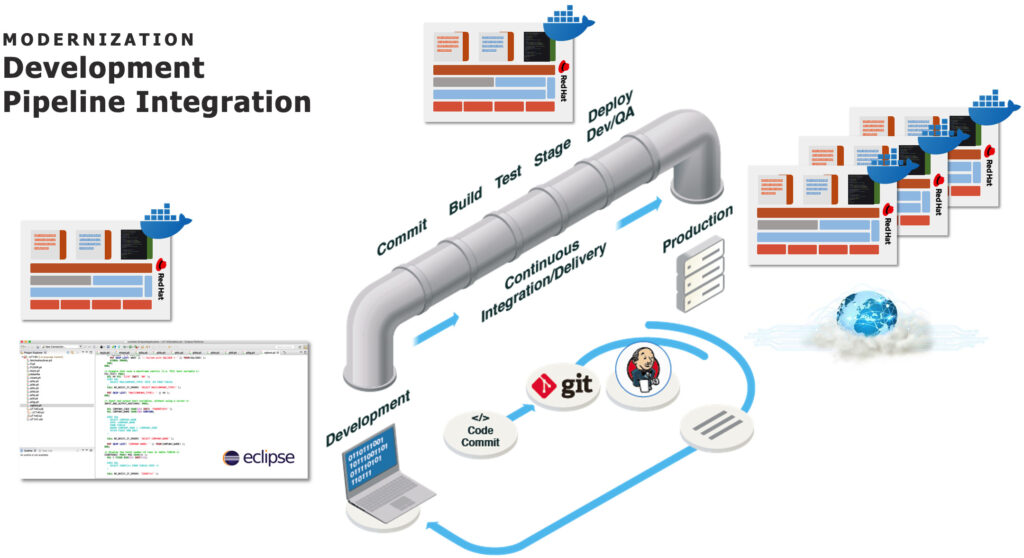

3. Pipeline Integration – Separate Pipeline and Processes for Mainframe Development

As with developer tooling, modern development pipelines do not fit easily with the way mainframe applications move from development into production. Build processes, testing (covered in the next section), source-code management, promotion, integration and deployment are all streamlined within a modern development pipeline. Specifically, modern tools enable developers to promote locally tested changes and have those changes flow through an automated testing pipeline, with steadily increasing levels of integration with existing applications and data.

The pipeline is made possible by the tight integration of tools such as Git, Eclipse, Jenkins and containers, which together apply the best practices of Continuous Integration/Continuous Deployment (CI/CD). These tools work in harmony with an agile development organisation to get application updates into production faster. The mainframe does not enjoy this efficiency, mainly because many of these technologies are unsupported there, and because running the automated processes repeatedly is costly.

4. Testing – Limited Autonomy and Automation, and Significant Expense

Testing, as part of a modern development pipeline, is a highly automated, continuous and scalable process.

The ability to launch containers with a single click, including all application dependencies, at any testing stage, is a fundamental reason why development is faster today than in the mainframe era.

Developers also expect to be able to launch an effective testing environment on their own workstation, without recourse to systems administrators, for the small, recurring unit tests that are intertwined with development itself. Because mainframe development depends on physical hardware, this testing autonomy, which provides optimal agility, is simply impossible for legacy applications.

Furthermore, large-scale performance and regression tests, which can be scheduled regularly, automatically and cost-effectively in modern container-based cloud environments, require extensive budgeting and planning for mainframe-dependent legacy applications.

Consequently, every stage of testing in legacy application development is prolonged by the need to involve mainframe systems administrators and to schedule expensive mainframe resources.

How LzLabs Software Defined Mainframe® (LzSDM) Solves These Issues

At its most fundamental level, LzSDM breaks the software lock-in that ties a legacy application to a mainframe computer. It does this by replacing the proprietary mainframe hardware and software dependencies with a set of Linux libraries written by LzLabs.

Specifically, these libraries faithfully recreate the behaviours of the operating system, subsystems, databases, security and language runtimes that previously tied the application to the mainframe hardware. They also provide instruction-set translation for the programs that will inevitably exist only as object code.

Once the mainframe environment is represented as a set of libraries, just like a more conventional application dependency, the real problem of legacy application development is solved; only the rather specious question of programming language remains. Any workstation or commodity server can now fully virtualise the environment needed to execute the legacy applications being modernised. Using containers and cloud compute capacity, multiple parallel instances of that environment can be scheduled at practically unlimited scale.

Put differently, with LzSDM a developer can spin up a full test environment for mainframe PL/1 or COBOL applications on their workstation, just as they would for a Java or C# program. The entire development cycle for legacy applications can follow exactly the same pipeline as for other applications. The same agile models and CI/CD DevOps policies, with a strong emphasis on "Shift-Left Testing", can be employed. The same automated testing at scale is possible. The same exploitation of open-source projects can be considered.

Ultimately, once re-hosted to LzSDM, the word “legacy” can be dropped from the description of these applications. They simply become applications written in COBOL or PL/1. Everything else about their ongoing enhancement is identical to those applications written in languages more commonly associated with modern development.